Good, bad, regulated AI. What is important to know about the first act regulating the use of artificial intelligence?

The world has entered the era of artificial intelligence, which is confidently “opening the door” to every area of our lives and changing the way we work, create, and provide support. Developers and tech giants are constantly working on new products, and the “AI-based” label tends to attract significant interest and investment to your business solutions.

But with great power comes great responsibility. In capable hands, AI is an assistant that can improve quality of life, increase productivity, and promote innovation and competitiveness. However, it can also pose risks to human rights, democracy, security, and the market. A balance is therefore required between promoting the development of AI and ensuring its responsible use.

Using the term “artificial intelligence” in the name of a product or service has long ceased to be unusual. Today, it is almost a prerequisite for success and for being seen as modern. Over the past year, AI technologies have become far more accessible to the general public, as the example of ChatGPT demonstrates, and using them requires less and less technical training.

Many tech giants, Microsoft in particular, are developing intuitive tools for working with powerful AI models. For example, Azure AI Studio is a platform for deploying popular AI models, and Azure ML Studio is a platform for machine learning. Thanks to such solutions, companies can shift their focus from administration and deployment to working directly with the platform and its settings. This not only reduces implementation time but also improves security and information protection.

We have researched the issue and want to tell you what risks the unregulated use of AI technologies poses for businesses and people, and about the first European law regulating the use of AI.

Risks of unregulated use of AI

Risks for business

- Inaccurate decisions or forecasts due to algorithm errors

AI algorithms can make mistakes or base decisions on inaccurate data. This can lead to painful financial losses, failed strategic decisions, or even reputational damage. For example, inaccurate demand forecasting for a particular SKU can expose a retailer to significant financial losses and potential write-offs. How can you avoid that? Accumulate historical data that will help make forecasts more accurate, and test and use proven systems, such as SMART Demand Forecast from SMART business.

- Cybersecurity

AI can be used to carry out sophisticated cyberattacks that compromise the infrastructure, data, and resources of businesses and government agencies in any country. You can reduce this risk by putting comprehensive protection in place for your business and regularly reminding employees about security hygiene.

- Legal and ethical concerns

Using AI technologies may violate privacy and data protection laws and compliance requirements (GDPR, CCPA, VCDPA, SOC, etc.), especially if data is collected or used without proper permission. This may result in a partial or complete loss of customer trust, reputational damage, and even legal sanctions. One safeguard is to use enterprise-grade, secure versions of generative models, such as Azure OpenAI.

- Dependence on technology

Overreliance on AI tools can erode employees’ ability to think critically and make decisions, which can hurt the agility and adaptability of the business. In addition, Big Brother Google is watching: it has tools to detect content that is mass-produced to manipulate search rankings, including content generated with AI. Do not anger Google, or you may share the fate of the agency that set a precedent when the corporation identified thousands of posts generated with excessive AI use and penalized it.

Using AI within the Microsoft ecosystem, for example, allows companies to keep full control over how corporate and confidential data is handled and to govern the results the models produce. These advantages are critical for business because they help minimize the risks of data leakage and unauthorized access.

What we see now is only the beginning of the large-scale story of AI development. In our conversations with customers, we see that every one of them, without exception, is considering the prospects of using AI in their business processes. Some companies are already actively implementing these technologies, while others are still looking for the right solutions.

Risks for humans

- Violation of privacy. AI technologies can be used for mass surveillance and analysis of personal data without people’s consent, which violates privacy and confidentiality.

- Discrimination and bias. AI algorithms can reinforce existing social prejudices against certain groups of people. This can lead to discrimination based on age, race, gender or other characteristics. No thanks.

- Loss of jobs. Perhaps humanity’s greatest fear is the possibility of competition with AI. Automation of processes may eventually mean job cuts in areas where humans can be painlessly replaced by machines.

- Psychological impact. Constant control and monitoring with artificial intelligence tools can cause stress and a sense of mistrust in people. And excessive reliance on technology reduces social interaction and can create a feeling of isolation from the outside world. Everything in moderation.

These risks and their potential consequences are a strong argument for governments around the world to begin regulating generative AI technologies at the state level. After all, clear regulation and shared ethical standards are needed to strike a balance between technological progress and the protection of human and business rights.

Where do we start?

The path to regulation of generative AI

Back in December 2023, negotiators from the European Parliament and the Council of the EU, which represents member-state governments, reached a provisional agreement on the main contentious points of the law. The main objectives behind the need for an EU law on artificial intelligence can be summarized in three points:

- Trust. To ensure that European citizens can trust AI technologies and their practical applications.

- Risk management. To create a framework for managing potential risks throughout the lifecycle of AI-based systems, especially those classified as high-risk.

- Streamlining. To establish uniform rules for AI systems across the EU, with consistent standards in all Member States.

However, the green light for further adoption of the act was not guaranteed, with some European heavyweights resisting certain parts of the agreement until the last days.

The main opponent was France, which, together with Germany and Italy, asked for a softer regulatory regime for powerful foundation models, such as OpenAI’s GPT-4, that underpin general-purpose AI systems like ChatGPT and Google’s Bard. The three largest European economies took this position out of concern about clipping the wings of promising European startups such as Mistral AI and Aleph Alpha, which could challenge American companies in the industry. The European Parliament, however, pushed for strict rules for all models without exceptions.

Nevertheless, on May 21, 2024, the Council of the EU gave final approval to the Artificial Intelligence Act (AI Act), which the European Parliament had adopted on March 13. The main goal of the regulation is to protect fundamental rights, democracy, the rule of law, and environmental sustainability from the risks that AI can pose.

The EU’s Artificial Intelligence Act is already being called a benchmark in technology regulation and is expected to become a model and working template for legislators around the world. The law applies to all sectors of the economy except the military and covers all types of artificial intelligence.

Key ideas of the Artificial Intelligence Act

The new rules will have serious consequences for any natural or legal person developing, using, or selling artificial intelligence systems in the EU. The law pursues two main goals:

1) to promote the development of artificial intelligence, investment and innovation in this area through clear legal regulation,

2) to eliminate or minimize the risks associated with the use of AI technologies.

The European Parliament notes that the AI Act prohibits the untargeted scraping of facial images from the internet or CCTV footage to create facial recognition databases. It also prohibits AI that manipulates human behavior or exploits people’s vulnerabilities, as well as emotion recognition in the workplace and in educational institutions.

Importantly, the Artificial Intelligence Act also imposes strict obligations on general-purpose generative AI systems. These include complying with EU copyright law, publishing summaries of the content used to train models, conducting regular testing, and ensuring adequate cybersecurity.

Of course, the restrictions won’t take effect immediately. The rules for general-purpose AI apply only 12 months after the law enters into force, and generative models already on the market, such as those behind OpenAI’s ChatGPT, Google’s Gemini, and Microsoft’s Copilot, have until 36 months after entry into force to bring their technology into compliance.

What will change for businesses and the government?

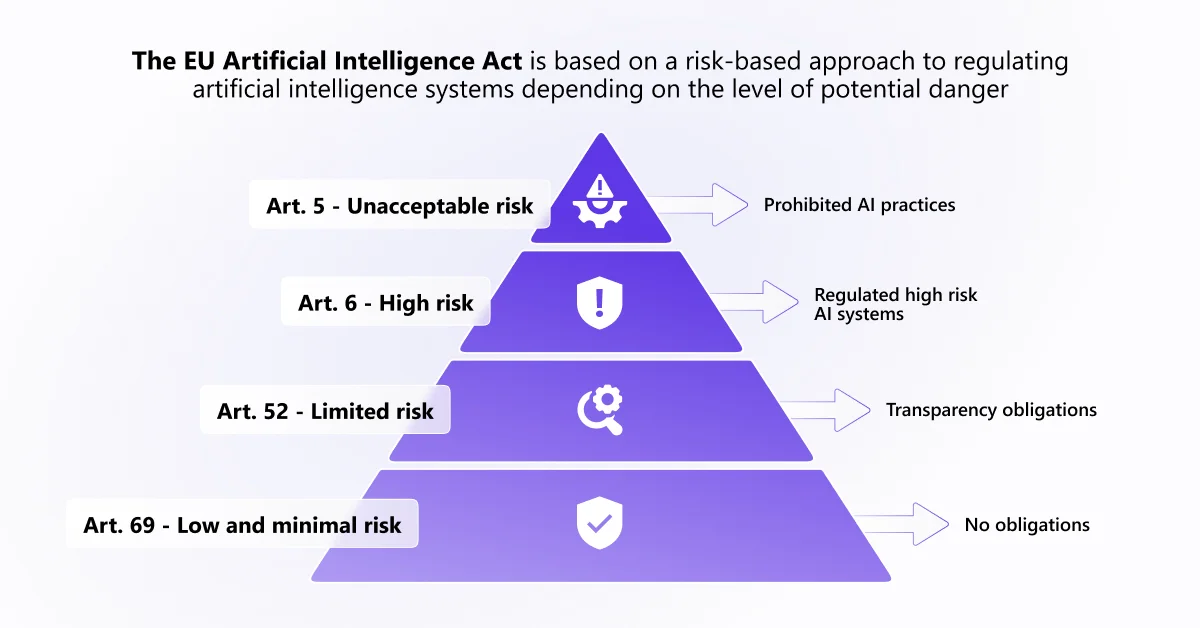

The Artificial Intelligence Act takes a risk-based approach that sorts artificial intelligence systems into categories according to the likelihood and potential severity of the harm they could cause:

- Unacceptable risk. Prohibited AI systems are those that pose an unacceptable level of risk to human safety, rights, or fundamental freedoms, for example systems that:

- manipulate the behavior of people or vulnerable groups,

- assign social scores to people based on their behavior, socioeconomic status, or personal characteristics,

- perform biometric identification and categorization of people, such as real-time facial recognition in publicly accessible spaces (subject to narrow law-enforcement exceptions).

- High risk. Such systems require strict regulation and oversight throughout their entire life cycle. Examples include systems used in:

- critical infrastructure (transport),

- education and vocational training, including access to it,

- employment,

- healthcare (AI in surgery using robotic technologies),

- provision of private and public services (credit scoring),

- law enforcement, where it may interfere with fundamental human rights,

- migration management, asylum, and border control,

- the administration of justice and democratic processes.

- General-purpose AI (GPAI) systems include, among others, Copilot, ChatGPT, and Gemini. The most powerful models behind them may pose systemic risks and are subject to a rigorous assessment process. GPAI providers must also comply with EU transparency and copyright requirements and prevent the generation of illegal content. AI-generated content must be clearly labeled so that the audience knows about it. Marketers, take note!

- Limited risk. AI systems in this category still require certain safeguards, such as informing users that they are dealing with AI, but the regulatory requirements are less stringent. Examples include AI-powered customer service chatbots, emotion detection systems, and deepfake content generators.

- Minimal risk. These AI systems carry the lightest regulatory burden. Examples include basic email filters that classify messages as spam.
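To make the tiered logic concrete, here is a minimal Python sketch of a first-pass internal triage. Everything in it is hypothetical: the `RiskTier` enum, the use-case tags, and the `triage` helper are illustrative names, not part of the AI Act or any official tool, and the mapping is a simplification that cannot replace legal review. General-purpose AI obligations apply alongside these tiers, so they are not modeled here.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    """AI Act risk tiers, ordered from lightest to heaviest regulatory burden."""
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    UNACCEPTABLE = 3


# Hypothetical use-case tags mapped to tiers, loosely following the examples above.
# Illustration only: this is not a legal classification.
USE_CASE_TIERS = {
    "spam_filter": RiskTier.MINIMAL,
    "customer_service_chatbot": RiskTier.LIMITED,
    "deepfake_generation": RiskTier.LIMITED,
    "credit_scoring": RiskTier.HIGH,
    "recruitment_screening": RiskTier.HIGH,
    "robotic_surgery": RiskTier.HIGH,
    "social_scoring": RiskTier.UNACCEPTABLE,
    "behavioral_manipulation": RiskTier.UNACCEPTABLE,
}


def triage(use_cases: list[str]) -> RiskTier:
    """Return the strictest tier among a system's use cases.

    Unknown tags are treated as HIGH so they are flagged for manual review
    instead of silently passing as minimal risk.
    """
    tiers = [USE_CASE_TIERS.get(tag, RiskTier.HIGH) for tag in use_cases]
    # A system inherits the strictest tier of any of its uses.
    return max(tiers, default=RiskTier.MINIMAL)
```

For example, `triage(["customer_service_chatbot", "credit_scoring"])` returns `RiskTier.HIGH`, because one high-risk use is enough to pull the whole system into that tier. Treating unknown tags as high risk is a deliberately conservative choice.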

New regulators

Companies that violate the law face fines of up to €35 million or 7% of their annual global turnover, whichever is higher, for the most serious violations. A lower scale of fines is provided for small and medium-sized enterprises, taking into account their interests and economic viability.
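The fine ceiling is simple arithmetic. The sketch below assumes the rule as commonly summarized for the most serious violations: the cap is €35 million or 7% of worldwide annual turnover, whichever is higher, while for SMEs the lower of the two applies. `max_fine_eur` is a hypothetical helper; it computes only the theoretical maximum, not what a regulator would actually impose, and it works in whole euros to avoid floating-point rounding.

```python
def max_fine_eur(annual_turnover_eur: int, is_sme: bool = False) -> int:
    """Theoretical fine ceiling for the most serious AI Act violations.

    Assumption: large companies face the higher of the two caps,
    SMEs the lower of the two.
    """
    fixed_cap = 35_000_000                      # fixed cap in euros
    pct_cap = annual_turnover_eur * 7 // 100    # 7% of annual turnover, integer euros
    return min(fixed_cap, pct_cap) if is_sme else max(fixed_cap, pct_cap)
```

For a company with €1 billion in annual turnover, `max_fine_eur(1_000_000_000)` returns 70,000,000, since 7% exceeds the fixed cap; for €100 million in turnover, the fixed €35 million cap is the higher figure.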

The law will be overseen by a new AI Office at EU level. This office will be able to evaluate general-purpose AI (GPAI) models and assist national authorities in supervising the market for high-risk AI systems. A European Artificial Intelligence Board has also been established; it consists of representatives of EU Member States and provides advice, opinions, and recommendations.

What should businesses do? Conclusions and recommendations

If you are a provider, developer, importer, distributor, or deployer of AI systems, you need to ensure that your AI practices comply with the new requirements of the law. Here are some practical steps you can take now:

Assess your risks. Ensure that your organization has a comprehensive risk management system in place to meet the requirements of the AI Act and apply the relevant requirements for data quality, technical documentation, human oversight, stakeholder engagement, etc.

Ensure transparency and understandability of AI systems. Inform users and other stakeholders about the use of AI, its capabilities and limitations, and give them the ability to appeal and correct AI-based decisions.

Raise awareness. Disseminate information and educate your employees about the benefits and risks of AI.

Build ethical standards. Promote sustainable and ethical use of AI by making these principles part of your organization’s strategy.

Keep your finger on the pulse. Keep an eye on new regulatory developments to anticipate their impact on your business and ensure timely compliance.

The AI Act is part of the EU’s new digital strategy, which covers other aspects of the digital world in addition to AI. Like the GDPR, the Act will apply to all companies that offer AI systems on the European market, wherever they are based. It aims to create an AI ecosystem that is aligned with European values and rules and promotes innovation and competitiveness.

As partners of Microsoft, we are monitoring developments and promoting the ethical distribution and use of generative AI, which is becoming an integral part of both Microsoft and SMART business solutions, applications and services.

What is Microsoft’s position on creating a governance and regulation model for AI technology in Europe? Microsoft is actively involved in the discussions on AI regulation, providing proposals based on its experience with customers and its own Responsible AI program.

Microsoft has adapted its five-point blueprint for governing AI to show how it aligns with the EU AI Act discussions. This work builds on the principles of its Responsible AI program: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability.

In its official blog, Microsoft states that it supports a regulatory regime in Europe that effectively addresses security issues and protects fundamental rights, while continuing to promote innovation that will ensure Europe’s global competitiveness.

The corporation has committed to implementing the NIST AI Risk Management Framework and to aligning with the international standards expected to support the AI Act.

All products in the SMART business portfolio meet the requirements of both local and international standards and regulations, which helps mitigate customers’ risks and manage business processes safely and legally. We are always ready to become your reliable IT partner and help with full-cycle implementation and support of:

- ERP for business process automation,

- HR and Payroll processes,

- Human capital management,

- Internal corporate portal,

- Microsoft 365 and Copilot for Microsoft 365,

- Microsoft 365 Adoption Programs,

- Microsoft Azure,

- Demand forecasting,

- Comprehensive protection of the company’s IT security,

- Electronic document management systems,

- Warehouse management systems.